Accelerating Unit Testing using Generative AI

Navigation

- Introduction

- The Importance of Gen AI-Based Unit Testing in Code Quality Assurance

- A Framework for Gen AI-Based Unit Test Case Generation

- Implementation of Gen AI-Based Unit Test Framework

- Outcome: Real-World Application of Gen AI-Based Unit Test in Automotive Systems

- Key Benefits of the Framework

- Conclusion

- References

Introduction

Unit testing is a crucial step in reliable software development, ensuring that each functional unit of the source code performs as expected. As software systems grow in complexity, maintaining high code coverage in software testing becomes increasingly vital to find bugs early during development. However, writing comprehensive unit tests is often a time-consuming and error-prone process, especially under tight deadlines. Generative AI (GenAI) proves to be valuable in this context. By automating the creation of unit test cases, GenAI not only accelerates the development process but also enhances the quality of the tests, leading to more robust and resilient software. With GenAI, developers can focus on innovation, knowing that their code is well-guarded against failures.

The Importance of Gen AI-Based Unit Testing in Code Quality Assurance

Unit testing [1] is a software testing method that involves validating individual functional units of a software application to ensure that they function correctly. Each unit is tested in isolation from the rest of the source code to verify its behaviour under various conditions, making it easier to pinpoint bugs early in the development cycle. To check the effectiveness of unit testing, developers rely on a metric called code coverage, which measures the extent to which the source code is executed during testing. Code coverage reflects the percentage of the codebase that has been tested, providing information on which parts have been verified and which parts are untested. High code coverage generally correlates with lower risk, but achieving this can be challenging, especially in large and complex software systems.

The traditional approach to unit testing involves developers manually writing the unit test cases after they understand the requirements. Tools such as Unittest, Pytest, and Nose in Python, or Google Test (GTest) and Boost Test in C/C++, facilitate the structuring and execution of unit tests. However, crafting each unit test case remains labour-intensive [2]. Developers must account for various inputs and edge cases, making comprehensive test coverage difficult to achieve. Generating the full spectrum of possible inputs for a function and determining the corresponding expected outputs, or ground truth, is currently a manual process. It typically involves interpreting the function's requirements from documentation, devising a range of input scenarios, and then computing the outputs to be used as expected results. As codebases evolve, the number of input combinations to consider can grow rapidly. This rising complexity increases the risk of reduced test coverage, potentially leaving critical edge cases untested. These challenges call for automated and efficient unit test case generation.
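To make the manual effort concrete, here is a minimal sketch of hand-written tests using Python's built-in unittest module. The function `clamp_throttle` and its 0 to 100 range are hypothetical and chosen only for illustration; they are not taken from the framework described in this paper.

```python
import unittest


def clamp_throttle(value: float) -> float:
    """Hypothetical unit under test: limit a throttle command to 0..100 percent."""
    if value < 0.0:
        return 0.0
    if value > 100.0:
        return 100.0
    return value


class ClampThrottleTest(unittest.TestCase):
    # Every expected value below was worked out by hand from the requirement,
    # which is the labour-intensive part of the manual process.

    def test_value_inside_range_is_unchanged(self):
        self.assertEqual(clamp_throttle(42.5), 42.5)

    def test_negative_value_is_clamped_to_zero(self):
        self.assertEqual(clamp_throttle(-5.0), 0.0)

    def test_value_above_range_is_clamped_to_hundred(self):
        self.assertEqual(clamp_throttle(250.0), 100.0)

    def test_boundaries_are_preserved(self):
        self.assertEqual(clamp_throttle(0.0), 0.0)
        self.assertEqual(clamp_throttle(100.0), 100.0)


def run_suite() -> unittest.TestResult:
    """Run the tests programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampThrottleTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Even for a three-branch function, four tests and five hand-computed expected values are needed, which hints at how the effort scales for larger units.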

GenAI [3], a class of artificial intelligence powered by Large Language Models (LLMs), can generate new content based on the data it has been trained on. It has proven to be a valuable asset in software development [4]. LLMs such as OpenAI’s GPT [5] and Google’s Gemini [6] have been trained on vast datasets, enabling them to generate contextually relevant content, including code snippets, documentation, and even unit test cases.

By leveraging GenAI, developers can accelerate the unit testing process [7, 8]. Instead of manually writing every test case, one can use GenAI to generate a broad range of test scenarios, including those that might be overlooked. This not only saves time but also enhances the thoroughness of testing, improving code coverage and reducing the likelihood of bugs in the codebase. As a result, GenAI helps developers maintain high standards of software quality while easing the burden of manual test case creation.

This white paper explains the GenAI-based unit testing framework developed by Tata Elxsi. This framework utilizes GenAI to:

- Generate unit test cases directly from the input textual software requirements to maximize code coverage

- Execute the generated unit test cases, and

- Generate the test reports and code coverage reports.

A Framework for Gen AI-Based Unit Test Case Generation

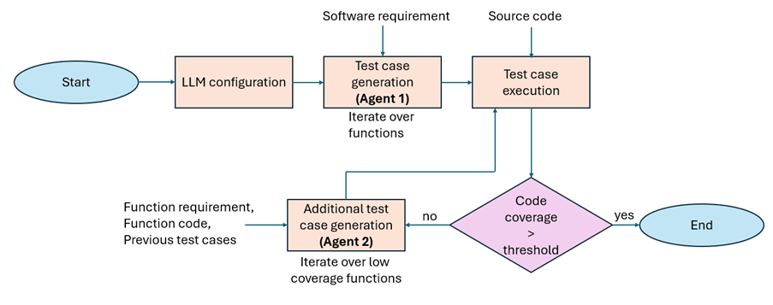

The proposed framework is shown in Figure 1. It involves a GenAI agent to automate the generation of unit test cases in the desired programming language from the given software requirements of various functions in an application. The generated test cases are executed on the source code of the application using the appropriate tool. Depending on the code coverage threshold set, additional unit test cases are generated from the requirements by using another GenAI agent iteratively.

Figure 1: Proposed Gen AI-based Unit Test Framework

The inputs to the framework are the software requirements of functions and the source code. The framework is structured into three stages: unit test case generation, test case execution, and code coverage improvement. Each of these stages is elaborated below:

1. Unit test case generation

The first stage in the framework involves invoking a GenAI agent (referred to as Agent 1) specifically tasked with generating unit test cases. This agent is configured to work with an LLM, accessed via API integration. The role of the agent is to understand the input software requirements of a functional unit and then generate comprehensive, robust, and compilable unit test cases in the desired programming language. The description of this role is passed as a prompt to the LLM along with the software requirements of a function, and the process is repeated for every function in the application. For each function under test, multiple unit test cases are generated to cover positive, negative, and edge scenarios, as well as the full range of input variables. For every set of input values, GenAI uses the software requirements to compute the expected outputs, ensuring thorough validation of the function's behaviour. The generated unit test cases are cleaned and saved in a file with an extension matching the target programming language.

2. Test case execution

The second stage focuses on compiling and executing the generated unit test cases on the corresponding source code using an appropriate testing tool. The tool reports whether each test case passes or fails, which helps in identifying any discrepancies between the expected and actual behaviour of the code. In addition, the framework assesses the code coverage of each function. Low code coverage indicates that the generated unit test cases are not sufficiently comprehensive.
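The idea of this stage, running tests and measuring how much of a function they exercise, can be illustrated with a standard-library-only sketch. It is a stand-in for a real testing and coverage tool, not what the framework uses: the function `classify_speed`, the three tests, and the helper `run_with_coverage` are all hypothetical, and the coverage figure is simple line coverage of one function.

```python
import dis
import sys


def classify_speed(speed: float) -> str:
    """Hypothetical unit under test."""
    if speed < 0:
        raise ValueError("speed must be non-negative")
    if speed > 120:
        return "over limit"
    return "ok"


def test_normal_speed():
    assert classify_speed(80) == "ok"


def test_over_limit():
    assert classify_speed(130) == "over limit"


def test_negative_speed_rejected():
    try:
        classify_speed(-1)
    except ValueError:
        return
    raise AssertionError("expected ValueError")


def run_with_coverage(tests, target):
    """Run each test; return pass/fail results and line coverage of target (%)."""
    code = target.__code__
    # Lines of the target function that carry bytecode, i.e. executable lines.
    executable = {line for _, line in dis.findlinestarts(code) if line is not None}
    hit = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None           # skip line-level tracing of other functions
        hit.add(frame.f_lineno)   # record the line for every event in target
        return tracer

    results = {}
    previous = sys.gettrace()     # restore any existing tracer afterwards
    sys.settrace(tracer)
    try:
        for test in tests:
            try:
                test()
                results[test.__name__] = "passed"
            except AssertionError:
                results[test.__name__] = "failed"
    finally:
        sys.settrace(previous)
    coverage = 100.0 * len(hit & executable) / len(executable)
    return results, coverage
```

Running only `test_normal_speed` leaves the `raise` and `"over limit"` lines unexecuted, so the reported coverage stays below 100%; that gap is the signal the next stage acts on.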

3. Code coverage improvement

The final stage involves a second GenAI agent (referred to as Agent 2), whose role is to generate additional unit test cases to improve the code coverage. Agent 2 is designed to analyze the function code, code coverage report, software requirements, and previously generated test cases to identify the code coverage gaps. The agent is instructed to avoid duplicating existing test cases and to focus on creating unique unit test cases that address uncovered scenarios. This generation step is repeated iteratively until a predefined code coverage threshold is reached for each function. The additional unit test cases are appended to the initial unit test case file, thereby enriching the test suite with more comprehensive code coverage.
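The iterative loop of this stage might look like the sketch below. All names are hypothetical: `measure` stands in for executing the suite and reading the coverage report, `generate_more` stands in for Agent 2, and the `max_iterations` guard is an added assumption (the paper only describes stopping at the coverage threshold).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CoverageLoopResult:
    tests: list[str]      # final test suite, initial tests first
    coverage: float       # last measured coverage in percent
    iterations: int       # number of rounds that added new tests


def improve_coverage(initial_tests: list[str],
                     measure: Callable[[list[str]], float],
                     generate_more: Callable[[list[str], float], list[str]],
                     threshold: float = 90.0,
                     max_iterations: int = 5) -> CoverageLoopResult:
    """Append new, non-duplicate tests until coverage reaches the threshold."""
    tests = list(initial_tests)
    coverage = measure(tests)
    iterations = 0
    while coverage < threshold and iterations < max_iterations:
        added = False
        for candidate in generate_more(tests, coverage):
            if candidate not in tests:   # skip duplicates of existing tests
                tests.append(candidate)
                added = True
        if not added:
            break                        # nothing new was produced; stop early
        coverage = measure(tests)
        iterations += 1
    return CoverageLoopResult(tests, coverage, iterations)
```

The early exit when no new test is produced avoids repeated LLM calls that cannot change the coverage figure.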

Both agents (Agent 1 and Agent 2) are integrated into a multi-agent framework. This framework coordinates the agents' activities, ensuring seamless communication and collaboration between them. The result is a highly automated, iterative approach to unit testing that significantly reduces manual effort while maximizing code coverage and test reliability.

Implementation of Gen AI-Based Unit Test Framework

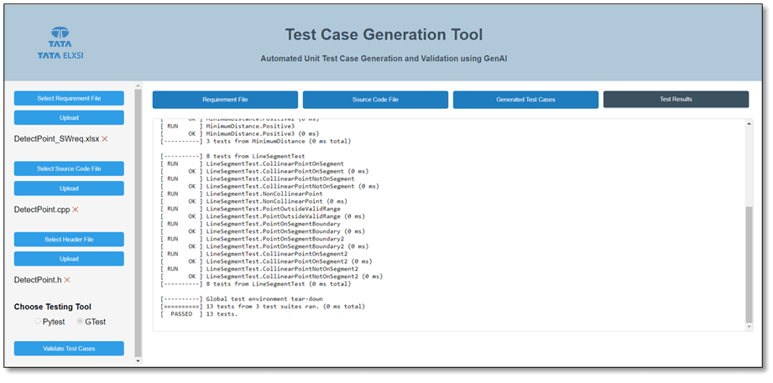

The framework, written in Python, was tested on source code in Python and C/C++. Google’s AI model, Gemini, was used as the LLM, integrated with CrewAI for developing the agents. In the CrewAI multi-agent framework, the tasks and expected outputs of the two agents were detailed in prompts. The Python framework running in the backend is connected to a frontend user interface developed using React, as shown in Figure 2. The interface allows the user to upload and view the software requirements, source code, and header files, and to select the appropriate testing tool (GTest/Pytest). The generated unit test cases and the test results are also displayed in the interface.

Figure 2: User Interface of the Gen AI-based Unit Test Tool

Outcome: Real-World Application of Gen AI-Based Unit Test in Automotive Systems

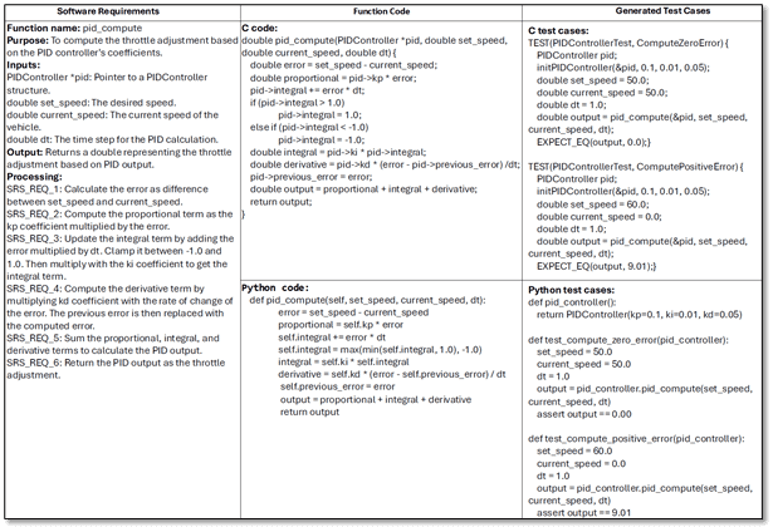

The accuracy of the implementation was tested on automotive system requirements. A PID speed controller that generates a throttle signal to minimize the error between set speed and actual speed is used as an example here. The software requirements for the PID controller function, its corresponding source code in C and Python, and the generated unit test cases are given in Figure 3 below. Full code coverage was achieved as the second agent iteratively generated the missing test cases in Python or C, based on the language selected. When the test cases were executed against the source code, all test cases passed.
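For readers without access to the figure, the following is a hypothetical sketch of what a PID speed controller and its positive, negative, and edge-case tests could look like in Python. It is not the code shown in Figure 3: the class layout, gains, the 0 to 100 throttle limits, and the rejection of a non-positive time step are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class PIDController:
    kp: float
    ki: float
    kd: float
    out_min: float = 0.0      # assumed lower throttle limit (percent)
    out_max: float = 100.0    # assumed upper throttle limit (percent)
    integral: float = 0.0
    previous_error: float = 0.0

    def update(self, set_speed: float, actual_speed: float, dt: float) -> float:
        """Return a throttle signal that drives actual_speed toward set_speed."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        error = set_speed - actual_speed
        self.integral += error * dt
        derivative = (error - self.previous_error) / dt
        self.previous_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.out_min, min(self.out_max, output))


def test_proportional_response():          # positive scenario
    pid = PIDController(kp=2.0, ki=0.0, kd=0.0)
    assert pid.update(set_speed=60.0, actual_speed=50.0, dt=0.5) == 20.0


def test_overspeed_clamps_to_zero():       # negative error, lower limit
    pid = PIDController(kp=2.0, ki=0.0, kd=0.0)
    assert pid.update(set_speed=50.0, actual_speed=60.0, dt=0.5) == 0.0


def test_large_error_saturates():          # edge: upper limit
    pid = PIDController(kp=2.0, ki=0.0, kd=0.0)
    assert pid.update(set_speed=200.0, actual_speed=0.0, dt=0.5) == 100.0


def test_integral_accumulates():           # state carried across calls
    pid = PIDController(kp=0.0, ki=1.0, kd=0.0)
    assert pid.update(set_speed=60.0, actual_speed=50.0, dt=0.5) == 5.0
    assert pid.update(set_speed=60.0, actual_speed=50.0, dt=0.5) == 10.0


def test_non_positive_dt_rejected():       # edge: invalid time step
    pid = PIDController(kp=1.0, ki=0.0, kd=0.0)
    try:
        pid.update(set_speed=60.0, actual_speed=50.0, dt=0.0)
    except ValueError:
        return
    raise AssertionError("expected ValueError for dt <= 0")
```

The tests are written as plain functions with `assert` statements, so they can be collected by Pytest or called directly. Together they exercise both clamping branches and the error path, which is the kind of gap-filling the second agent is described as performing.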

Figure 3: Sample of Input requirements, source code and generated test cases

Key Benefits of the Framework

Compared with the manual process, the proposed GenAI-based unit test case generation framework offers savings in time, cost, and effort, and reduces the errors introduced by manual test writing.

In the manual process of unit test case development, engineers write unit test cases based on their interpretation of software requirements. This step requires a thorough understanding and often involves significant human effort to ensure accuracy and coverage. Test case generation can take days or even weeks, depending on the complexity and size of the project. It requires continuous attention and input from developers and testers. Overall, a manual process is time-consuming and prone to errors. Significant time is spent across all stages, leading to delays in release cycles, especially in projects with frequent requirement changes.

In the proposed framework, the LLM automatically generates the unit test cases from the requirements. GenAI can generate test cases in a matter of minutes, drastically reducing the time spent in the test development phase. The GenAI-based unit testing process can reduce the entire testing cycle from weeks to hours, offering more than a 60% reduction in time, effort, and cost compared to the manual approach.

Conclusion

The application of GenAI for generating unit test cases shows a significant advancement in the automation of software testing. While the current framework is designed for generating test cases in C/C++ and Python, its design is flexible and can easily be extended to support other programming languages.

At Tata Elxsi, backed by extensive experience in automotive software development and GenAI, this automated approach has significantly accelerated the software development lifecycle. The integration of GenAI into our testing workflow not only speeds up software testing but also improves product delivery timelines and quality, ultimately leading to more reliable products and faster time-to-market. Please contact us if you need support in integrating GenAI-based unit testing for your projects.

References

[1] Per Runeson, “A survey of unit testing practices”, IEEE Software, vol. 23, no. 4, July 2006.

[2] Ermira Daka and Gordon Fraser, “A survey on unit testing practices and problems”, Proceedings of the 25th IEEE International Symposium on Software Reliability Engineering, pp. 201-211, November 2014.

[3] K. S. Kaswan, J. S. Dhatterwal, K. Malik and A. Baliyan, “Generative AI: A Review on Models and Applications”, 2023 International Conference on Communication, Security and Artificial Intelligence (ICCSAI), Greater Noida, India, pp. 699-704, 2023.

[4] Jianxun Wang and Yixiang Chen, “A review on code generation with LLMs: Application and evaluation”, Proceedings of the IEEE International Conference on Medical Artificial Intelligence, November 2023.

[5] OpenAI’s GPT: https://openai.com/index/gpt-4/

[6] Google’s Gemini: https://gemini.google.com

[7] Junjie Wang et al., “Software testing with large language models: Survey, Landscape and vision”, IEEE Transactions on Software Engineering, vol. 50, no. 4, April 2024.

[8] Shreya Bhatia et al., “Unit Test Generation using Generative AI: A Comparative Performance Analysis of Autogeneration Tools”, Proceedings of the ICSE Workshop on Large Language Models for Code, Lisbon, Portugal, April 2024.